Machine learning for botanical identification

Nathan Stern, an analytical chemist at Amway / Nutrilite (Ada, USA), specializes in development and validation of various types of chromatographic test methods. He develops both quantitative (UHPLC) and qualitative (HPTLC) phytochemical tests that are designed to ensure ingredient quality and authenticity. He is also experienced in using machine learning techniques, especially as they apply to analytical chemistry.

Introduction

The primary goal of Nathan’s research was to develop a machine learning system to automate the evaluation of HPTLC-generated botanical fingerprints for the determination of botanical identity. The current industry approach involves manually comparing these HPTLC images with botanical reference materials of both authentic species and common adulterants. Machine learning techniques, such as machine vision, can enable faster and more accurate identification of botanicals.

The developed machine vision system identified ginger (Zingiber officinale) and its closely related species or adulterants with high accuracy. It can classify the species for a whole batch of images in only a few seconds, significantly reducing analyst workload and enhancing confidence in botanical identification. The software was also validated using two different approaches, against real and against synthetic HPTLC images, showing that it is both accurate and robust.

HPTLC Image Data

All HPTLC images were obtained from the online and publicly available HPTLC Association Atlas repository. This includes 77 total image files for the following species: Alpinia officinarum, Boesenbergia rotunda, Kaempferia galanga, Kaempferia parviflora, Zingiber montanum, Zingiber officinale, and Zingiber zerumbet [2].

Sample preparation

To 1.0 g of each powdered sample, 10 mL of methanol are added, followed by 10 minutes of sonication. The samples are centrifuged, and the supernatant is used as the test solution [2].

Chromatogram layer

HPTLC plates silica gel 60 F254 (Merck), 20 × 10 cm, are used.

Sample application

2.0 μL of sample and standard solutions are applied as bands with the Automatic TLC Sampler (ATS 4), 15 tracks, band length 8.0 mm, distance from the left edge 20.0 mm, track distance 11.4 mm, distance from lower edge 8.0 mm [2].
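The application geometry above fixes where each track sits on the plate, which is useful when individual tracks have to be cropped from a plate image. The following is a minimal sketch of that arithmetic. It assumes that the 20.0 mm distance refers to the center of the first band and that the plate is 200 mm wide; the function names are illustrative and not part of any instrument software.

```python
# Application geometry as stated in the text (all values in mm).
FIRST_TRACK_MM = 20.0     # assumed: center of the first band from the left edge
TRACK_DISTANCE_MM = 11.4  # center-to-center distance between tracks
N_TRACKS = 15
BAND_LENGTH_MM = 8.0
PLATE_WIDTH_MM = 200.0    # 20 x 10 cm plate


def track_centers(first=FIRST_TRACK_MM, step=TRACK_DISTANCE_MM, n=N_TRACKS):
    """Return the horizontal center of each track, measured from the left edge."""
    return [round(first + i * step, 1) for i in range(n)]


def track_bounds(center, band_length=BAND_LENGTH_MM):
    """Return the (left, right) edges of a band centered at `center`."""
    half = band_length / 2
    return (round(center - half, 1), round(center + half, 1))


centers = track_centers()
print("first track center:", centers[0])
print("last track center:", centers[-1])
print("last band edges:", track_bounds(centers[-1]))
```

Under these assumptions the last band ends well inside the plate width, so all 15 tracks fit on a 20 × 10 cm plate.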

Chromatography

Plates are developed in the Automatic Developing Chamber (ADC 2) after chamber saturation (with filter paper) for 20 min and plate activation for 10 min at a relative humidity of 33% using a saturated magnesium chloride solution. Development is carried out with toluene – ethyl acetate 3:1 (V/V) to a migration distance of 70 mm (from the lower edge), followed by drying for 5 min [2].

Post-chromatographic derivatization

The plates are derivatized using an anisaldehyde reagent prepared by adding 10 mL of acetic acid, 5 mL of sulfuric acid, and 0.5 mL of anisaldehyde to 85 mL of ice-cooled methanol. The derivatization reagent (3 mL) is sprayed using the Derivatizer (blue nozzle, level 3). The plates are heated at 100°C for 3 min and allowed to cool before detection [2].

Documentation

Images of the plates are captured with the TLC Visualizer 3 in UV 254 nm, UV 366 nm, and white light after development, and again after derivatization in UV 366 nm and white light.

Machine learning hardware and software

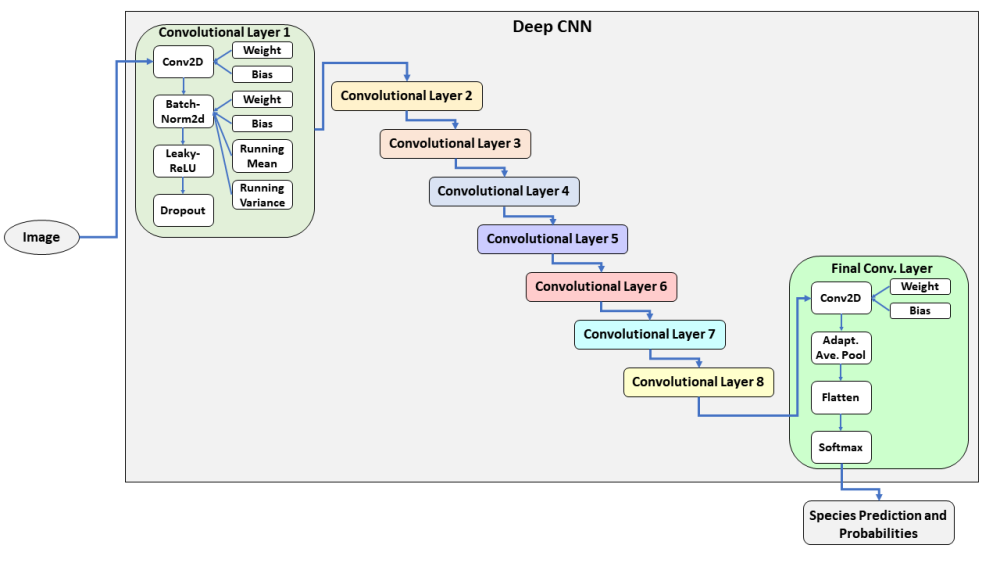

The machine vision model was created on a computer system with an Nvidia GeForce RTX 3070 GPU for computation. The machine learning software was implemented in Python, using Visual Studio Code as the IDE and PyTorch as the machine learning framework. The machine vision system comprises several neural networks: a deep conditional generative adversarial network (DCGAN) made up of a discriminator and a generator, and a deep convolutional neural network (deep CNN).

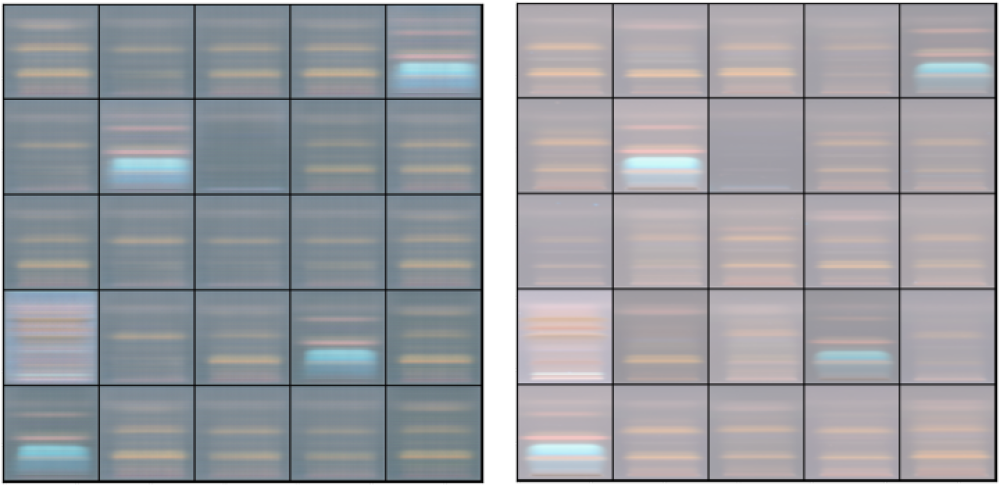

The role of the DCGAN in the system is to augment the limited dataset by creating a large number of synthetic HPTLC images for each species, based on a partition of real HPTLC image data. This synthetic data was then used to train the deep CNN model, which was validated separately against both real and synthetic HPTLC image datasets.
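The partitioning step can be illustrated with a small, framework-independent sketch. It splits a list of labeled images per species, so that one part can feed the generative model while the rest stays untouched for validation. The 50/50 split, the fixed seed, the image identifiers, and the ten images per species are placeholders chosen for illustration; the source does not state how the real data was partitioned.

```python
import random
from collections import defaultdict

SPECIES = [
    "Zingiber officinale", "Alpinia officinarum", "Boesenbergia rotunda",
    "Kaempferia galanga", "Kaempferia parviflora", "Zingiber montanum",
    "Zingiber zerumbet",
]


def stratified_partition(items, train_fraction=0.5, seed=0):
    """Split (image_id, species) pairs per species into (train, held_out).

    train_fraction and seed are illustrative assumptions, not values
    taken from the original work.
    """
    by_species = defaultdict(list)
    for image_id, species in items:
        by_species[species].append(image_id)

    rng = random.Random(seed)
    train, held_out = [], []
    for species in sorted(by_species):
        ids = sorted(by_species[species])
        rng.shuffle(ids)
        # Keep at least one image per species on the training side.
        cut = max(1, int(len(ids) * train_fraction))
        train += [(i, species) for i in ids[:cut]]
        held_out += [(i, species) for i in ids[cut:]]
    return train, held_out


# Toy data: hypothetical identifiers, 10 images per species.
toy_items = [(f"img_{s}_{k}", name) for s, name in enumerate(SPECIES) for k in range(10)]
train, held_out = stratified_partition(toy_items)
print(len(train), "images for the generative model,", len(held_out), "held out")
```

Splitting per species rather than over the pooled list keeps every class represented on both sides, which matters when only a handful of images exist for each species.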

Results and discussion

The machine vision system successfully generated realistic synthetic HPTLC images using the DCGAN. These synthetic images were then used to train a deep CNN, which identified the botanical species with a high level of accuracy.

HPTLC images for each of the evaluated botanical species – Zingiber officinale, Alpinia officinarum, Boesenbergia rotunda, Kaempferia galanga, Kaempferia parviflora, Zingiber montanum, and Zingiber zerumbet – were processed and classified.

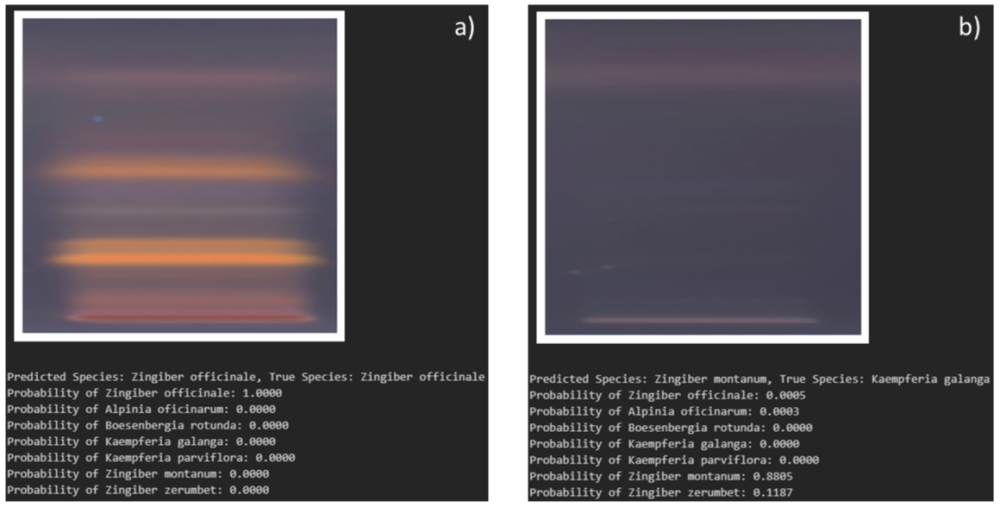

The system demonstrated 98.7 % accuracy when tested on real HPTLC images, correctly classifying 76 out of 77 botanical samples. The only misclassified image was identified as Zingiber montanum instead of Kaempferia galanga.
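The reported figure follows directly from the counts: 76 correct classifications out of 77 images. A short sketch of that bookkeeping is given below. Because per-species counts are not given here, the 76 correct results are lumped under a placeholder label; only the single misclassification uses the species names from the text.

```python
from collections import Counter


def summarize(pairs):
    """Return (accuracy, error counts) for (true_species, predicted_species) pairs."""
    pairs = list(pairs)
    correct = sum(1 for true, pred in pairs if true == pred)
    errors = Counter((true, pred) for true, pred in pairs if true != pred)
    return correct / len(pairs), errors


# 76 correct results (placeholder label) plus the one reported error.
pairs = [("correct", "correct")] * 76
pairs.append(("Kaempferia galanga", "Zingiber montanum"))

accuracy, errors = summarize(pairs)
print(f"accuracy: {accuracy:.1%}")  # accuracy: 98.7%
for (true, pred), n in errors.items():
    print(f"{n} x {true} classified as {pred}")
```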

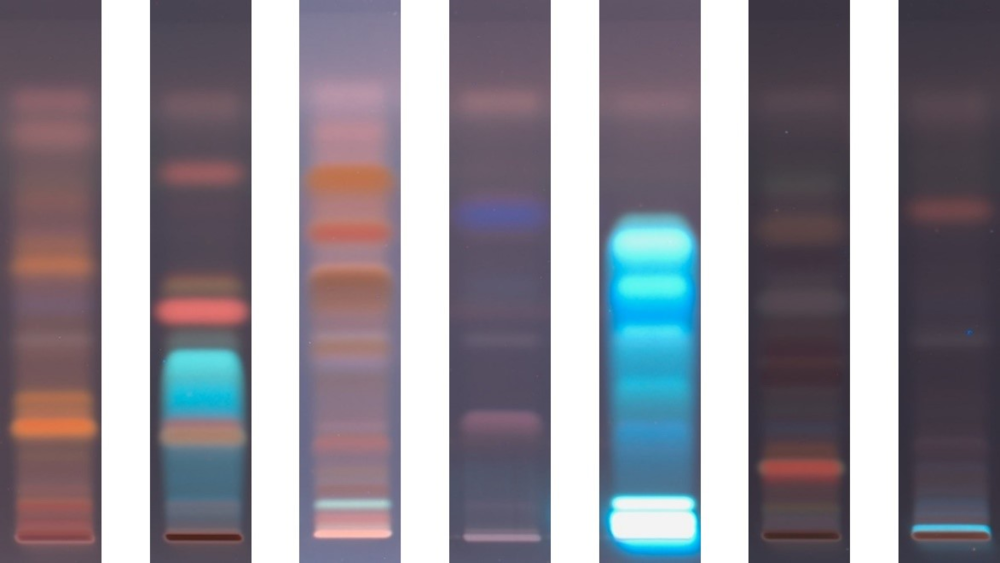

Cropped, representative HPTLC images for each of the species that were evaluated for the machine learning system. From left to right: Zingiber officinale, Alpinia officinarum, Boesenbergia rotunda, Kaempferia galanga, Kaempferia parviflora, Zingiber montanum, and Zingiber zerumbet [1].

High-level overview of the general architecture of a deep CNN, similar to that used to create the botanical ID machine vision model [1].

Example of synthetic data as created by the DCGAN. Top image: 5×5 matrix of 25 DCGAN generated synthetic images; bottom image: 5×5 matrix of real HPTLC images [1].

Examples of classification and probability outputs as provided by the botanical ID machine learning system [1].

Accuracy on the synthetic dataset was 100 %, indicating that the CNN effectively learned to distinguish the features within this controlled dataset. To further validate system robustness, an additional test using a held-out validation dataset of real HPTLC images achieved 97.3 % classification accuracy.

Comparison between real and synthetic HPTLC images confirmed the high fidelity of the generated data. The system’s predictive capabilities were also assessed by outputting species probability scores for each classification, providing an additional measure of confidence in the machine vision model’s results.
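Per-species probability scores of this kind are commonly obtained by applying a softmax function to the raw outputs (logits) of a classifier's final layer. The sketch below shows that conversion in plain Python. Whether the published system uses softmax is an assumption, and the logits are made-up numbers, not outputs of the actual model.

```python
import math

SPECIES = [
    "Zingiber officinale", "Alpinia officinarum", "Boesenbergia rotunda",
    "Kaempferia galanga", "Kaempferia parviflora", "Zingiber montanum",
    "Zingiber zerumbet",
]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, labels=SPECIES):
    """Return (predicted label, its probability, all probabilities by label)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best], dict(zip(labels, probs))


# Hypothetical logits for one image, in the order of SPECIES.
example_logits = [4.0, 0.5, 0.1, -1.0, 0.0, 1.2, 0.3]
label, confidence, scores = classify(example_logits)
print(f"predicted: {label} ({confidence:.1%})")
```

A prediction whose top probability is only slightly above the runner-up can then be flagged for manual review instead of being accepted automatically.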

Overall, this automated system demonstrated significant improvements in speed and accuracy for botanical species identification compared to traditional manual analysis methods, eliminating human subjectivity while maintaining high reliability.

Literature

[1] Stern N, Leidig J, Wolffe G. Proof of Concept: Autonomous Machine Vision Software for Botanical Identification. J AOAC Int. 2024 Nov 19. DOI: 10.1093/jaoacint/qsae091

[2] HPTLC Association, The International Atlas for Identification of Herbal Drugs, https://www.hptlc-association.org/atlas/hptlc-atlas.cfm

Contact: Nathan Stern, Sciences Department – Innovation and Science, Amway Corp, 7575 Fulton St E, Ada, MI, USA, nathan.stern@amway.com